Unacademy has a large amount of textual data available: notes and class presentations, student chat data, quizzes, and test-series question-answer sets. The text also includes multilingual and cross-lingual content, along with transliterated text, covering more than 10 major Indian languages.

Each type of data mentioned above makes several NLP projects possible. A few of them are Chat Priority Detection, Question Difficulty Estimation, Similar/Duplicate Question Detection, Ed-Tech Entity Recognition (an NER task), and Text/Course Quality Evaluation.

These problems can be approached with traditional NLP techniques such as word- and token-based classifiers, TF-IDF, and word2vec/doc2vec. However, over the last few years it has been shown repeatedly that models pre-trained on a large corpus and then fine-tuned for a specific use case tend to yield the best results.
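For contrast, here is a minimal sketch of one such traditional baseline: TF-IDF vectors compared with cosine similarity, as might be used for Similar/Duplicate Question Detection. It uses only the Python standard library, naive whitespace tokenization, and a smoothed IDF; the function names and sample questions are illustrative, not taken from our pipeline.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (dict of term -> weight) per document."""
    tokenized = [doc.lower().split() for doc in docs]  # naive whitespace tokenization
    n_docs = len(tokenized)
    # Document frequency: the number of documents each term appears in.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            # Term frequency times a smoothed IDF, log((1 + N) / (1 + df)) + 1.
            term: (count / len(tokens)) * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


questions = [
    "what is the derivative of sin x",
    "find the derivative of sin x",
    "who was the first mughal emperor",
]
vecs = tfidf_vectors(questions)
print(cosine(vecs[0], vecs[1]) > cosine(vecs[0], vecs[2]))  # True
```

Baselines like this capture surface word overlap only, which is exactly the gap that pre-trained contextual models aim to close.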

We encourage you to check out how NLP evolved over the years, from simple statistical approaches to transformers, here. You can also check out the GLUE benchmark and its leaderboard.

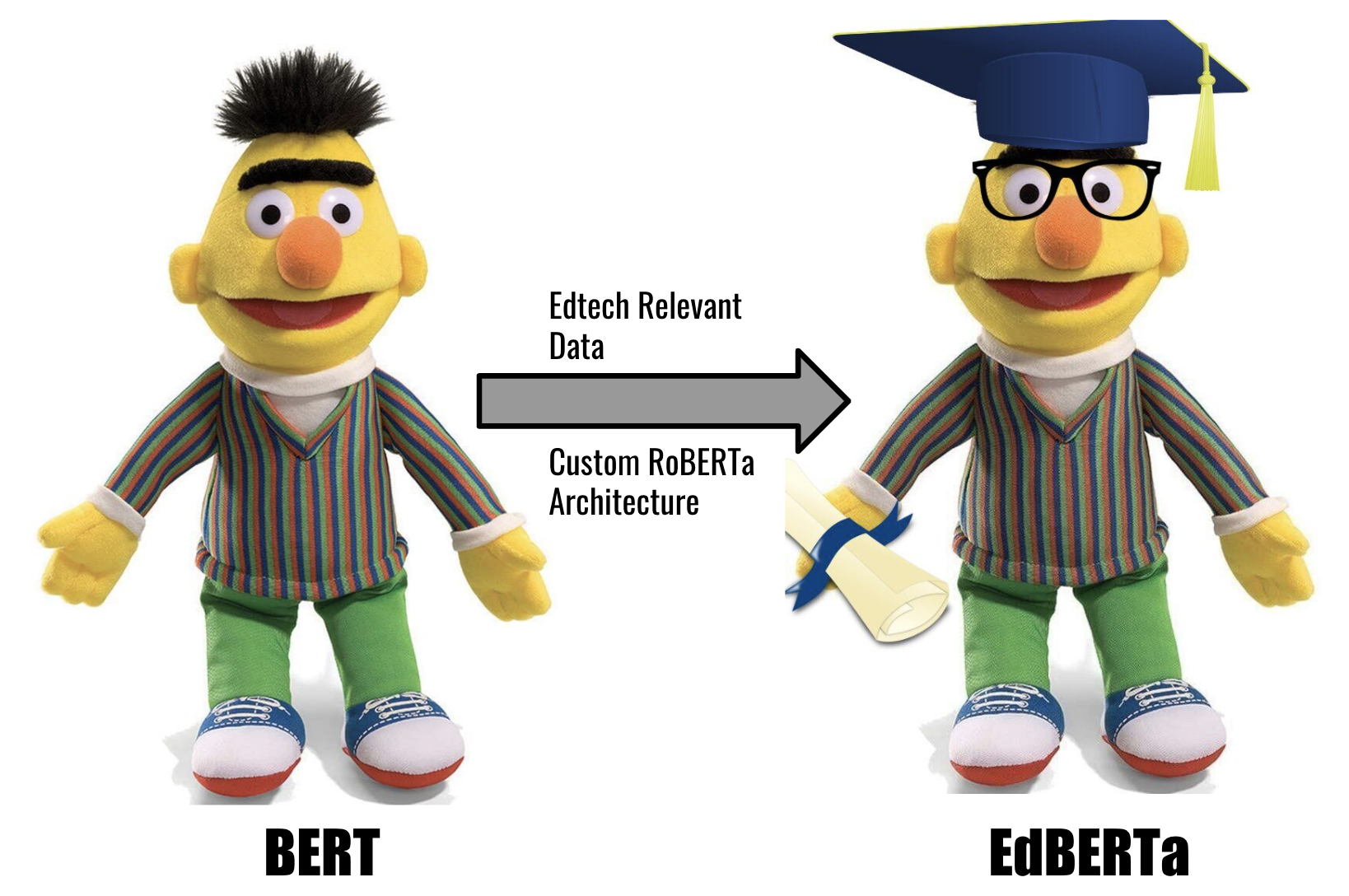

We could directly take pre-trained models such as BERT, RoBERTa, and ALBERT (or their multilingual variants) and fine-tune them on our tasks. But what if we could pre-train our own model and use it instead of these generic language models?

Train from scratch instead of fine-tuning?

We were inspired by several BERT variants: CamemBERT (a state-of-the-art language model for French), Med-BERT (a model pre-trained on a structured EHR dataset of 28,490,650 patients), and TaBERT (pre-trained to learn representations for tabular data).

At Unacademy, we have a large amount of text data: gigabytes of it, billions of words/tokens, spanning chats, transliterated text, code-mixed quizzes, and more. So in our case it made sense to pre-train rather than only fine-tune.

Indian languages make up less than 5% of the data on which SOTA models are trained, so fine-tuning these models did not make sense for us. In future iterations, we could pick up models like MuRIL or IndicBERT and fine-tune them instead of training from scratch, as that might shorten the training period.

We also customized the architecture for faster inference instead of using the standard base architectures.

Data Collection

We collected text data from the Unacademy platform: quizzes, Q&A, course content and notes, live class chats, and so on. There was no need to collect external text data, but we chose to include some, as shown below:

We intentionally skipped Wikipedia and Reddit data, as we had already accumulated many GBs of domain-specific data and also wanted to experiment as quickly as possible.

Once we had collected all the data we needed, we lowercased all of the text, which made sense in our case because chat data is mostly lowercase anyway. Books were converted into pages and then into paragraphs. We also kept all characters, since they include many math symbols, emojis, and so on.
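The preprocessing described above can be sketched as follows. This is a minimal illustration, not our production code: it assumes each book arrives as a list of page strings with paragraphs separated by blank lines, and the helper names `book_to_lines` and `write_corpus` are hypothetical. Lowercasing does not drop any characters, so math symbols and emojis survive.

```python
import io


def book_to_lines(pages):
    """Yield one lowercased paragraph per line from a book given as a list of page strings.

    Assumption: paragraphs within a page are separated by blank lines.
    """
    for page in pages:
        for paragraph in page.split("\n\n"):
            # Join wrapped lines inside a paragraph and collapse runs of whitespace.
            text = " ".join(paragraph.split())
            if text:
                # Lowercase, but keep every character (math symbols, emojis, etc.).
                yield text.lower()


def write_corpus(sources, out):
    """Write every paragraph from every source as one line of the training file."""
    n_lines = 0
    for pages in sources:
        for line in book_to_lines(pages):
            out.write(line + "\n")
            n_lines += 1
    return n_lines


buf = io.StringIO()
book = ["Newton's Second Law\n\nF = ma, where m is mass.\n\n", "Δx ≥ 0 😊"]
print(write_corpus([book], buf))  # 3
print(buf.getvalue())
```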

At this point, we had a training text file with more than 200 million lines and more than 5 billion words.

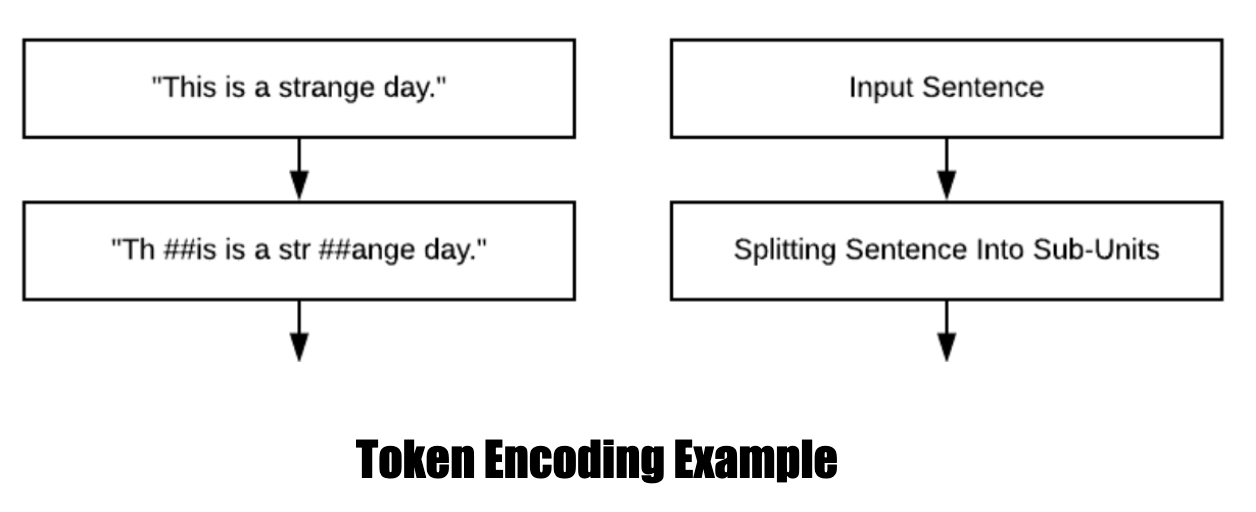

Tokenizer Training

Since our data is domain-specific, we opted to train our own sub-word tokenizer instead of reusing the BERT or GPT tokenizers. We trained it with SentencePiece, which supports both byte-pair encoding (BPE) and the unigram language model (ULM). To learn more about tokenization and why it is required, check this article.
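To build intuition for what BPE training does, here is a toy sketch of the classic merge-learning loop (in the style of Sennrich et al.) in plain Python. It is not SentencePiece's implementation, which works on raw text with whitespace encoded as the `▁` symbol and is far more optimized; the word frequencies below are made up for illustration.

```python
import re
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every standalone occurrence of the pair with the merged symbol."""
    merged = "".join(pair)
    pattern = re.compile(r"(?<!\S)" + re.escape(" ".join(pair)) + r"(?!\S)")
    return {pattern.sub(lambda _: merged, word): freq for word, freq in vocab.items()}


def learn_bpe(word_freqs, num_merges):
    """Learn up to num_merges merge rules from a {word: frequency} dict."""
    # Start from single characters; "</w>" marks the end of a word.
    vocab = {" ".join(word) + " </w>": freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; ties go to the first seen
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges


corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
print(learn_bpe(corpus, 3))  # [('e', 's'), ('es', 't'), ('est', '</w>')]
```

Each learned merge becomes a vocabulary entry, so a larger `vocab_size` simply means running more merges, which lets frequent domain strings end up as single tokens.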

Installation and training are straightforward and explained here. We used the following commands to train the tokenizer model and then encode the training text, where --input is the training text file. We chose a relatively large vocab_size of 96k in the hope that a larger vocabulary would cover some or all EdTech-specific token patterns.

```shell
$ spm_train --input=train.txt --model_prefix=edbpe --vocab_size=96000 --character_coverage=1.0 --model_type=bpe
$ spm_encode --model=edbpe.model --output_format=piece < train.txt > train.bpe
```

Training produced two files, edbpe.model and edbpe.vocab, which we used to create the token-encoded text files. We also set aside ~1 million lines for the validation and test files.
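Carving out held-out data can be sketched as below. This is a minimal illustration that assumes the lines fit in memory; for a 200M-line file a streaming approach or a tool like `shuf` would be more practical. How the ~1 million held-out lines were divided between validation and test is not stated above, so `split_holdout` and its sizes are hypothetical.

```python
import random


def split_holdout(lines, n_valid, n_test, seed=0):
    """Shuffle a list of lines and split it into (train, valid, test)."""
    if n_valid + n_test > len(lines):
        raise ValueError("held-out size exceeds corpus size")
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    valid = shuffled[:n_valid]
    test = shuffled[n_valid:n_valid + n_test]
    train = shuffled[n_valid + n_test:]
    return train, valid, test


lines = [f"line {i}" for i in range(10)]
train, valid, test = split_holdout(lines, n_valid=2, n_test=2)
print(len(train), len(valid), len(test))  # 6 2 2
```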

At this point, we had token-encoded text files with more than 12 billion tokens to train on.
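Before fairseq can train on these files, they are typically binarized with `fairseq-preprocess`, which expects a dictionary with one `symbol count` pair per line. Assuming a SentencePiece `.vocab` file with one `piece<TAB>score` entry per line, the sketch below converts it; skipping SentencePiece's special pieces (because fairseq's dictionary adds its own `<s>`, `<pad>`, `</s>`, `<unk>`) and using a placeholder count are our assumptions, and the function names are hypothetical.

```python
SPECIAL_PIECES = {"<unk>", "<s>", "</s>", "<pad>"}


def sp_vocab_to_fairseq_dict(vocab_lines, dummy_count=100):
    """Turn SentencePiece .vocab lines ("piece<TAB>score") into fairseq dict lines ("piece count").

    fairseq's Dictionary adds its own special symbols, so SentencePiece's are skipped.
    The count is a placeholder value (an assumption for this sketch).
    """
    out = []
    for line in vocab_lines:
        piece = line.rstrip("\n").split("\t")[0]
        if piece and piece not in SPECIAL_PIECES:
            out.append(f"{piece} {dummy_count}")
    return out


def convert_vocab_file(vocab_path="edbpe.vocab", dict_path="dict.txt"):
    """Read a SentencePiece vocab file and write a fairseq-style dict.txt."""
    with open(vocab_path, encoding="utf-8") as src:
        entries = sp_vocab_to_fairseq_dict(src)
    with open(dict_path, "w", encoding="utf-8") as dst:
        dst.write("\n".join(entries) + "\n")
    return len(entries)
```

The resulting dict.txt could then be passed to `fairseq-preprocess --only-source --srcdict dict.txt` together with `--trainpref`, `--validpref`, `--testpref` pointing at the encoded files and `--destdir $DATA_DIR`, as in fairseq's RoBERTa pre-training tutorial; treat this as our reading of that workflow rather than a verified recipe for this exact setup.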

Training Using Fairseq

Fairseq is a sequence modeling toolkit used mostly by researchers in the NLP and speech domains. It is actively maintained by Facebook AI Research (FAIR) and, in our view, is underrated, since HuggingFace is usually the default choice.

We chose Fairseq over HuggingFace mostly because of our good past experience with it. It also offers extensive options to control exactly what you train, makes it easy to add or remove architecture code, and supports straightforward fine-tuning on downstream tasks via both the command line and the Python API.

The complete training procedure is explained in this custom RoBERTa training tutorial. We started from the roberta-base architecture but customized it for faster inference while keeping performance good enough. We trained 3 variants with different parameter sets to compare their performance. The final parameters and command are shown below: we chose just 4 encoder layers and 16 attention heads, and used --fp16 for faster, more efficient training.
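To get a feel for how much smaller this configuration is than roberta-base, here is a rough parameter-count sketch. It is an approximation under our own assumptions, not output from fairseq: learned positional embeddings with 2 extra slots, biases on every linear layer, an LM head tied to the token embeddings, no classification head, and a vocabulary of exactly 96,000 (fairseq's dictionary adds a few special symbols on top). The function name `roberta_param_count` is hypothetical.

```python
def roberta_param_count(vocab_size, embed_dim, ffn_dim, num_layers, max_positions=512):
    """Rough parameter count for a RoBERTa-style encoder with a tied LM head."""
    d, f = embed_dim, ffn_dim
    layer_norm = 2 * d  # weight + bias
    # Token embeddings + learned positions (assumed 2 extra slots) + embedding layer norm.
    embeddings = vocab_size * d + (max_positions + 2) * d + layer_norm
    attention = 4 * (d * d + d)  # q, k, v and output projections
    ffn = (d * f + f) + (f * d + d)  # two linear layers
    per_layer = attention + ffn + 2 * layer_norm
    lm_head = (d * d + d) + layer_norm + vocab_size  # dense + norm + output bias
    return {
        "embeddings": embeddings,
        "encoder_layers": num_layers * per_layer,
        "lm_head": lm_head,
        "total": embeddings + num_layers * per_layer + lm_head,
    }


small = roberta_param_count(96000, 512, 2048, 4)
base = roberta_param_count(50265, 768, 3072, 12)
print(small["total"], small["encoder_layers"])  # 62385408 12609536
print(base["encoder_layers"])  # 85054464
```

Under these assumptions most of the ~62M parameters sit in the 96k-entry embedding table, while the four encoder layers hold about 12.6M versus about 85M in roberta-base's twelve. Since an embedding lookup is cheap, the much smaller encoder stack is likely the main source of the faster inference.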

```shell
$ fairseq-train --fp16 $DATA_DIR \
    --task masked_lm --criterion masked_lm \
    --encoder-layers 4 --encoder-embed-dim 512 --encoder-ffn-embed-dim 2048 \
    --encoder-attention-heads 16 --arch roberta_base \
    --sample-break-mode complete --tokens-per-sample $TOKENS_PER_SAMPLE \
    --optimizer adam --adam-betas '(0.9,0.98)' --adam-eps 1e-6 --clip-norm 0.0 \
    --lr-scheduler polynomial_decay --lr $PEAK_LR \
    --warmup-updates $WARMUP_UPDATES --total-num-update $TOTAL_UPDATES \
    --dropout 0.1 --attention-dropout 0.1 --weight-decay 0.01 \
    --batch-size $MAX_SENTENCES --update-freq $UPDATE_FREQ \
    --max-update $TOTAL_UPDATES --log-format simple --log-interval 1 \
    --skip-invalid-size-inputs-valid-test
```

We trained using Python 3.7, an RTX 3090, CUDA 11, and the NVIDIA NGC container for PyTorch. It took us 3 weeks in total to train all 3 variants of the model. We stopped training once perplexity dropped below 4.5. More details about perplexity can be found here.
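fairseq already logs `ppl` next to `loss`, but it helps to know how the two relate when reading the logs: fairseq reports the loss in base 2, so perplexity is 2 raised to the loss. The sketch below, with hypothetical helper names, converts between the two and shows the loss level that corresponds to a 4.5 perplexity stopping point.

```python
import math


def perplexity_from_fairseq_loss(loss_base2):
    """fairseq logs loss in base 2 (natural-log loss divided by ln 2), so ppl = 2 ** loss."""
    return 2 ** loss_base2


def loss_threshold_for_perplexity(target_ppl):
    """Base-2 loss below which perplexity drops under target_ppl."""
    return math.log2(target_ppl)


print(round(loss_threshold_for_perplexity(4.5), 3))  # 2.17
```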

The model is now ready to use as-is, as well as for fine-tuning on downstream tasks.

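```python
# Load the model in fairseq
from fairseq.models.roberta import RobertaModel

# The directory should also contain the dict.txt used during training.
edberta = RobertaModel.from_pretrained(
    '/path/to/edberta_checkpoint_file/',
    checkpoint_file='edberta_best.pt',
)
edberta.eval()  # disable dropout for inference
```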

Designing our own tasks instead of GLUE

It is always good practice to evaluate a pre-trained model on the downstream GLUE tasks. In our case, however, we wanted to check how our model performs on EdTech-related tasks rather than on the traditional GLUE tasks.

So we created 3 specific tasks for downstream fine-tuning.

- Course Subject Classification - The task was to classify given book-text sentences into Maths, Psychology, Physics, Chemistry, Geography, and History. We decided that F1 would be the evaluation metric for this task.
- Chat Topic Categorisation - The task was to classify chats into Maths, Physics, Chemistry, Geography, History, Greetings, and Doubts related chat classes. Again, F1 was the evaluation metric for this task.
- Chat Clustering - The task was to cluster a set of chats and check cluster quality statistically. We defined a custom metric, MCDC (Mean Cosine Distance from Centroid), which works exactly as its name suggests.
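MCDC is only described by its name above, so here is a minimal sketch of one plausible reading: for each cluster, take the centroid (mean) of its chat embeddings, compute each member's cosine distance from that centroid, average within the cluster, then average across clusters. The unweighted averaging across clusters, the function names, and the toy 2-D vectors are our assumptions for illustration; real inputs would be sentence embeddings, which might for instance be pooled model features.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / norm


def mcdc(embeddings, labels):
    """Mean Cosine Distance from Centroid, averaged over clusters (lower = tighter)."""
    clusters = {}
    for vec, label in zip(embeddings, labels):
        clusters.setdefault(label, []).append(vec)
    per_cluster = []
    for members in clusters.values():
        dim = len(members[0])
        # Centroid: arithmetic mean of the cluster's vectors.
        centroid = [sum(v[i] for v in members) / len(members) for i in range(dim)]
        dists = [cosine_distance(v, centroid) for v in members]
        per_cluster.append(sum(dists) / len(dists))
    return sum(per_cluster) / len(per_cluster)


tight = mcdc([[1, 0], [1, 0.1], [0, 1], [0.1, 1]], [0, 0, 1, 1])
loose = mcdc([[1, 0], [0, 1], [1, 1], [-1, 1]], [0, 0, 1, 1])
print(tight < loose)  # True
```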

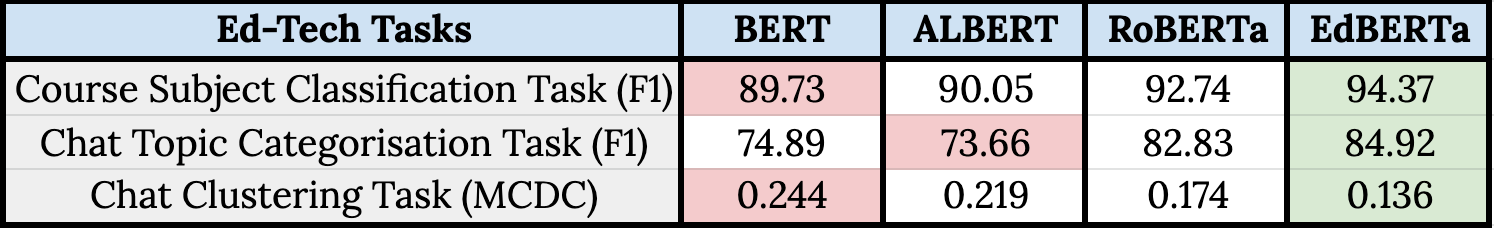

All training data collected for these tasks was verified by our tagging team, and we tried to keep it as accurate as possible. We fine-tuned BERT, ALBERT, RoBERTa, and EdBERTa on these tasks, which took a few hours of training.

Benchmarks

After fine-tuning and running final inference, we compiled each task's metric for each of these language models.

We got the following encouraging results for EdBERTa:

- Course Subject Classification → 5.17% improvement over BERT
- Chat Topic Categorisation → 13.26% improvement over ALBERT
- Chat Clustering → 28% improvement over RoBERTa (a lower MCDC is preferred here, as it means tighter, closer cluster points)
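The figures above do not state whether they are relative improvements or percentage-point differences. Assuming relative improvement, with the direction flipped for MCDC because lower is better, one way to compute them is sketched below; the function name is hypothetical and the scores in the usage line are made-up placeholders, not our benchmark numbers.

```python
def relative_improvement(ours, baseline, higher_is_better=True):
    """Relative improvement of `ours` over `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    change = (ours - baseline) if higher_is_better else (baseline - ours)
    return 100.0 * change / abs(baseline)


# Placeholder scores: an F1 going from 0.80 to 0.84, and an MCDC going from 0.50 to 0.36.
print(round(relative_improvement(0.84, 0.80), 2))  # 5.0
print(round(relative_improvement(0.36, 0.50, higher_is_better=False), 2))  # 28.0
```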

On all of our defined tasks, EdBERTa performed better than the existing SOTA models we compared against.

It is very important to note that this doesn't prove our model is better than SOTA.

It only shows that EdBERTa performs better for our use cases and on our Ed-Tech data. That is useful for us, but the model may not work well with data or tasks from other domains such as health, news, or e-commerce.

Final thoughts

In this article, we went through the process of creating a transformer-based language model from scratch and explained why we chose to do so. This proof of concept supports the idea that if you have a large amount of data and your domain is very niche, pre-training your own model is worth doing instead of just fine-tuning SOTA NLP models.

This model could serve as a seed model for all the NLP tasks described in the earlier sections. It is already showing good results on the Question Difficulty Detection and Chat Priority Categorisation tasks, where we were able to get better results quickly. Average inference times for 100 parallel requests are ~12ms on CPU (on instances like c5.xlarge) and ~4ms on GPU (on instances like g4dn.xlarge), which is on par with many NLP APIs in terms of performance.
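The measurement setup behind these numbers is not detailed here. As an illustration only, the sketch below fires 100 requests through a thread pool and averages the per-request wall-clock latency; `fake_predict` is a hypothetical stand-in, and in practice you would swap in a call to the deployed model or its HTTP endpoint. Note that this measures latency as seen by each request, not throughput.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_predict(text):
    """Hypothetical stand-in for a model call; replace with real inference."""
    time.sleep(0.001)
    return len(text)


def timed_call(fn, payload):
    """Run fn(payload) and return its wall-clock latency in milliseconds."""
    start = time.perf_counter()
    fn(payload)
    return (time.perf_counter() - start) * 1000.0


def average_latency_ms(fn, payloads, concurrency):
    """Send all payloads through a thread pool and return the mean per-request latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda p: timed_call(fn, p), payloads))
    return statistics.mean(latencies)


print(f"{average_latency_ms(fake_predict, ['sample chat'] * 100, concurrency=100):.2f} ms")
```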