Our primary message broker for inter-service asynchronous communication is AWS SQS. It is a reliable, cloud-native, hosted message broker that can give us massive throughput with little to no throttling, which is why it is our default choice. In this essay, we discuss an efficient way to use SQS that could well be applied to any message broker out there.

For some of our internal systems, we have to process ~5 million events every minute, and the message broker that has to take this load is SQS. If we write 5 million events as 5 million separate SQS messages, the cumulative round-trip network latency, i.e. the time taken to put all the messages in SQS, would be very high.

Even if we assume an average round-trip latency of 10 milliseconds, sending 5 million messages sequentially would take close to 14 hours. To make things worse, we receive ~5 million events every minute, which renders this approach infeasible.

The next logical step is batching. SQS's batch send API lets us send up to 10 messages in one call, which cuts the time to a tenth, i.e. ~1.4 hours or about 84 minutes. That is a massive improvement over the naive approach, but still far from what we want to achieve.
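The batching step can be sketched roughly as follows. This is a minimal illustration rather than our production code: `chunked` and `send_in_batches` are hypothetical helper names, and `client` is assumed to be a boto3 SQS client (or anything exposing the same `send_message_batch(QueueUrl=..., Entries=...)` call).

```python
from typing import Iterator, List

SQS_MAX_BATCH_ENTRIES = 10  # SendMessageBatch accepts at most 10 entries per call


def chunked(items: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each holding at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def send_in_batches(client, queue_url: str, bodies: List[str]) -> int:
    """Send every body via SendMessageBatch and return the number of API calls made.

    `client` is assumed to behave like a boto3 SQS client.
    """
    calls = 0
    for batch in chunked(bodies, SQS_MAX_BATCH_ENTRIES):
        # Entry Ids only need to be unique within a single batch request.
        entries = [
            {"Id": str(index), "MessageBody": body}
            for index, body in enumerate(batch)
        ]
        response = client.send_message_batch(QueueUrl=queue_url, Entries=entries)
        calls += 1
        failed = response.get("Failed", [])
        if failed:
            # A real publisher would probably retry these entries instead.
            raise RuntimeError(f"{len(failed)} entries failed in batch call {calls}")
    return calls
```

With this shape, 25 message bodies result in 3 API calls (10 + 10 + 5) instead of 25.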

To get even more performance out of it, we can piggyback multiple events in one message and then send those messages in a batch. This seems a sound solution, but we have to crunch some numbers to understand how many events can be put in a single SQS message.

As per AWS's documentation, the maximum payload size of an SQS message is 256 KB. Each of our events is roughly 1 KB, which means we can pack 256 events in one SQS message and make a batch call with 10 such messages, which would let us send 5 million events in ~20 seconds. This sounds pretty awesome, but there is a catch ...

One API call to SQS, single or batch, cannot carry more than 256 KB of data. A batch of 10 full messages would be 256 x 10 = 2560 KB, so we cannot get the time as low as 20 seconds with this approach. The best we can do is 256 events per call, which takes ~200 seconds. Going from 14 hours to 84 minutes to 200 seconds is still a massive improvement, but with an influx of 5 million events every minute, 200 seconds is way too long.
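The estimates so far can be reproduced with a few lines of arithmetic. The sketch below only restates the assumptions already made in the text (10 ms per sequential round trip, ~1 KB per event, 256 KB per API call); the names are illustrative.

```python
import math

EVENTS = 5_000_000      # events to publish
ROUND_TRIP_S = 0.010    # assumed average round-trip latency per API call
EVENT_KB = 1            # approximate size of one event
MAX_CALL_KB = 256       # maximum payload per SQS API call, single or batch
BATCH_SIZE = 10         # maximum messages per batch call


def total_seconds(events_per_call: int) -> float:
    """Time to publish all events sequentially at `events_per_call` events per call."""
    calls = math.ceil(EVENTS / events_per_call)
    return calls * ROUND_TRIP_S


events_per_full_message = MAX_CALL_KB // EVENT_KB  # 256 events of ~1 KB each

estimates = {
    # one event per message, one message per call: roughly 13.9 hours
    "naive": total_seconds(1),
    # ten single-event messages per call: roughly 83 minutes
    "batched": total_seconds(BATCH_SIZE),
    # ten full messages per call, ignoring the per-call limit: roughly 19.5 seconds
    "packed_ignoring_call_limit": total_seconds(events_per_full_message * BATCH_SIZE),
    # 256 KB per call in total, so 256 events per call: roughly 195 seconds
    "packed": total_seconds(events_per_full_message),
}

if __name__ == "__main__":
    for name, seconds in estimates.items():
        print(f"{name}: {seconds:,.1f} s")
```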

The improvement so far looks awesome, but there is still scope for more. If we observe closely, batching improved the time, but we never considered optimizing data transfer; the amount of data transferred has remained fairly constant in our journey till now.

So, how can we squeeze more out of the existing situation? Enter Data Compression. To take our solution to the next level, we leverage data compression to pack more events in a single SQS message, thus bringing our time down even further.
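A minimal sketch of packing compressed events into one message body might look like this. It makes a few assumptions that are not part of our setup as described here: events are serialized as JSON, zlib is the codec, and the compressed bytes are base64-encoded because an SQS message body must be text (this costs roughly a third in size). `pack_events` and `unpack_events` are hypothetical names.

```python
import base64
import json
import zlib
from typing import List

MAX_MESSAGE_BYTES = 256 * 1024  # SQS message size limit assumed in this essay


def pack_events(events: List[dict]) -> str:
    """Serialize, compress, and text-encode a list of events into one message body."""
    raw = json.dumps(events, separators=(",", ":")).encode("utf-8")
    compressed = zlib.compress(raw)
    # Base64 output is plain ASCII, so its character count equals its byte size.
    body = base64.b64encode(compressed).decode("ascii")
    if len(body) > MAX_MESSAGE_BYTES:
        raise ValueError("packed message exceeds 256 KB; pack fewer events")
    return body


def unpack_events(body: str) -> List[dict]:
    """Reverse of pack_events, run on the consumer side."""
    compressed = base64.b64decode(body)
    return json.loads(zlib.decompress(compressed).decode("utf-8"))
```

A publisher would keep adding events to a message until `pack_events` approaches the limit, and the consumer calls `unpack_events` on each received body to get the original events back.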

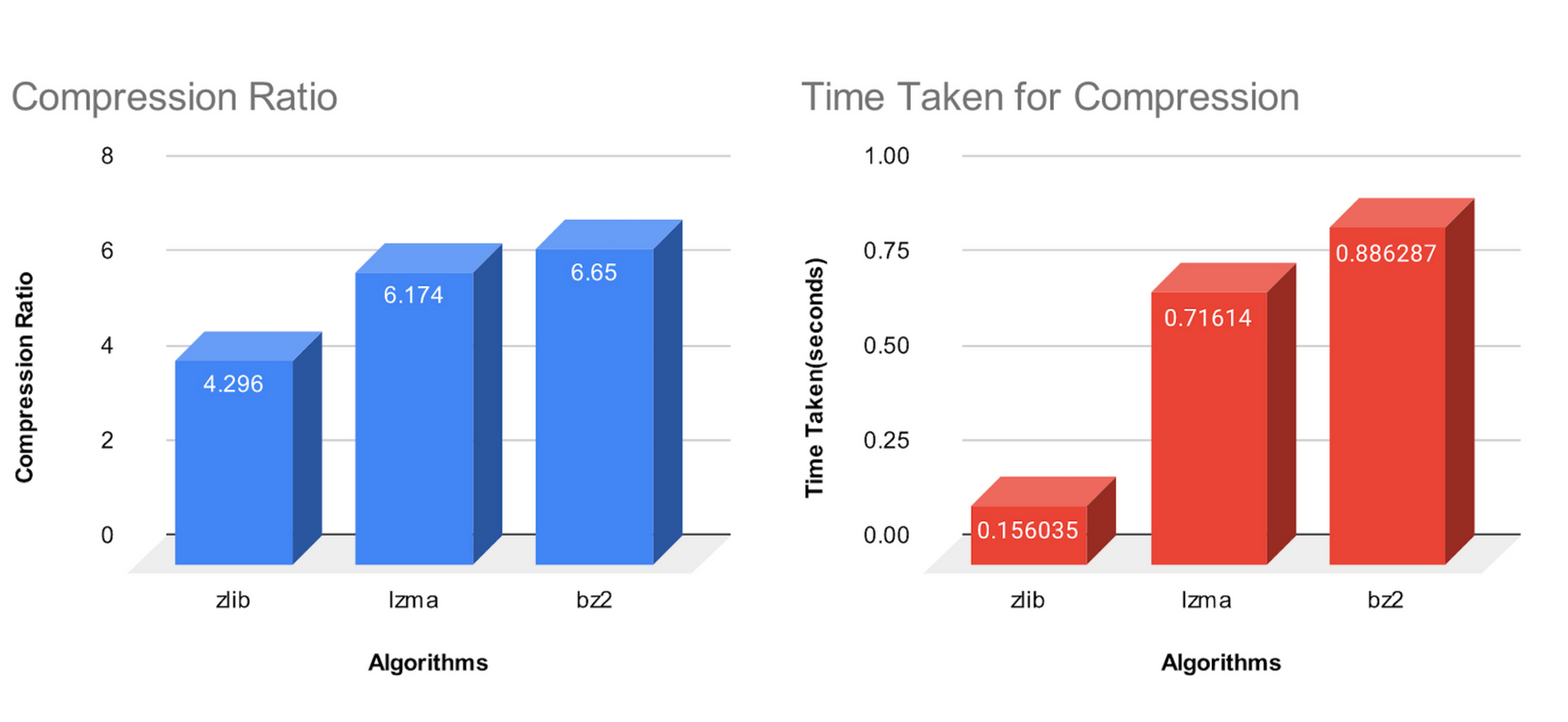

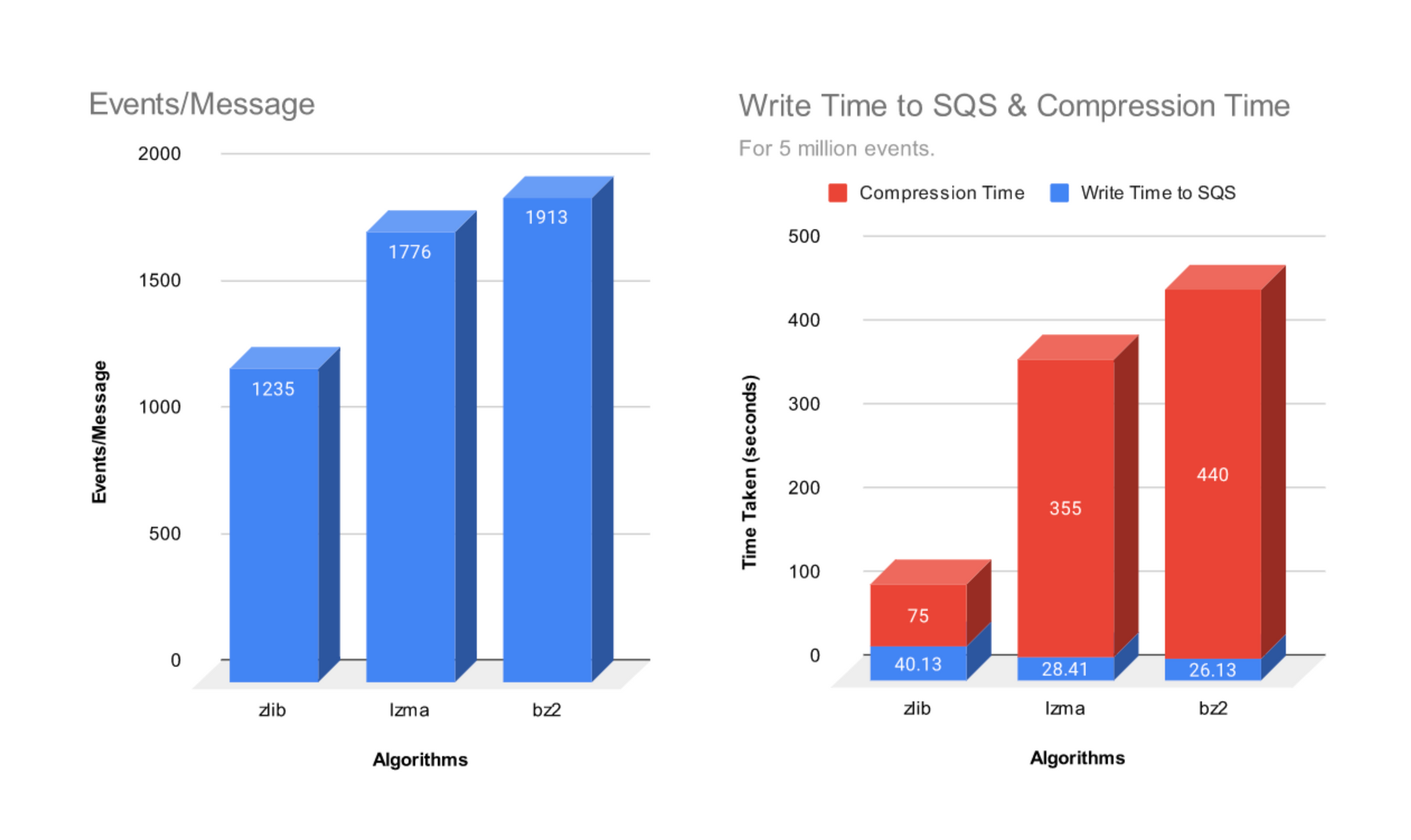

We did a comparative study of 3 widely used compression algorithms, zlib, lzma, and bz2, on our data and judged them on compression ratio and processing time.
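A comparison of this kind can be run with the Python standard library alone. The harness below is only a sketch: `sample_payload` generates a synthetic, made-up payload rather than our real events, and all codecs use their default settings, so the numbers it prints are not the ones from our study.

```python
import bz2
import json
import lzma
import time
import zlib
from typing import Callable, Dict


def sample_payload(n_events: int = 2000) -> bytes:
    """Build a synthetic JSON payload; a stand-in for real event data."""
    events = [
        {
            "event_id": i,
            "user_id": i % 97,
            "action": "page_view",
            "path": f"/items/{i % 500}",
        }
        for i in range(n_events)
    ]
    return json.dumps(events).encode("utf-8")


CODECS: Dict[str, Callable[[bytes], bytes]] = {
    "zlib": zlib.compress,
    "lzma": lzma.compress,
    "bz2": bz2.compress,
}


def compare(payload: bytes) -> Dict[str, Dict[str, float]]:
    """Return compression ratio and wall-clock compression time per codec."""
    results: Dict[str, Dict[str, float]] = {}
    for name, compress in CODECS.items():
        started = time.perf_counter()
        compressed = compress(payload)
        elapsed = time.perf_counter() - started
        results[name] = {
            "ratio": len(payload) / len(compressed),
            "seconds": elapsed,
        }
    return results


if __name__ == "__main__":
    for name, stats in compare(sample_payload()).items():
        print(f"{name}: ratio={stats['ratio']:.2f} time={stats['seconds'] * 1000:.2f} ms")
```

Running it against a representative sample of real events, on the machine that will do the publishing, gives a fairer picture than any synthetic payload.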

It was evident from the study that there was a direct relation between compression ratio and processing time: the lower the compression ratio, the less time it took to process, and the higher the compression ratio, the more time it took.

In most cases, sending messages to buffers/queues happens asynchronously, so compression ratio can be favored over compression time. With a unit event of 1 KB, we could fit 1913 events in every message with the bz2 algorithm. However, for sending 5 million events, zlib was the fastest despite having the lowest compression ratio.

Note that the above results hold for our sample payload. In real-world scenarios, results may vary depending on the payload structure and the machine on which compression is done. In our case, the unit event size was smaller, and with zlib we actually sent around 5k events in a single message.

Apart from saving network on both the publisher and the consumer, there is one more implicit advantage of sending data in bulk, which is realized on the consumer side: the consumer saves on repeated handshakes and stays warm while it works through a message, which is not the case if it has to consume one event per message.

From a pricing perspective, SQS bills each 64 KB chunk of a payload as 1 request. Therefore, the more events we pack into each chunk, for example through compression, the more data we transfer per unit price.
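The effect on billing can be sketched with the 64 KB chunk rule stated above. The function names are illustrative, and the example figures reuse numbers from earlier in this essay (256 uncompressed events versus 1913 bz2-compressed events in a full 256 KB message).

```python
import math

BILLING_CHUNK_BYTES = 64 * 1024  # each 64 KB chunk of a payload is billed as 1 request


def billed_requests(payload_bytes: int) -> int:
    """Number of requests a payload of this size is billed as (at least 1)."""
    return max(1, math.ceil(payload_bytes / BILLING_CHUNK_BYTES))


def events_per_billed_request(events_in_message: int, payload_bytes: int) -> float:
    """How many events each billed request carries for a given message."""
    return events_in_message / billed_requests(payload_bytes)


full_message = 256 * 1024  # a message filled up to the 256 KB limit

uncompressed = events_per_billed_request(256, full_message)   # 64 events per request
with_bz2 = events_per_billed_request(1913, full_message)      # 478.25 events per request
```

A full 256 KB message is billed as 4 requests either way; compression changes how many events those 4 requests carry.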